It may seem obvious that software is complicated. But whether you’re a programmer or not, it’s probably more complicated than you think it is. I recently reread Frederick Brooks’ *The Mythical Man-Month*, now over 30 years old but still remarkably relevant. Brooks warned about how easy it is to think software is less complex than it really is, and the field of software development hasn’t changed much in this respect.

Imagining a piece of software and what it can do, and making it a reality, are of course two very different things. Brooks warned programmers about the tendency to think a piece of programming will be easy when it is considered in the abstract: in his words, the tendency to assume “all will go well”. If a feature is easy to imagine, our instincts tell us, it can’t be too hard to implement. This tendency affects even the most experienced and cynical developers. You want to think it’ll be easy, everyone else wants you to tell them it’s easy, you can’t think of any concrete problems, and so you underestimate the complexity.

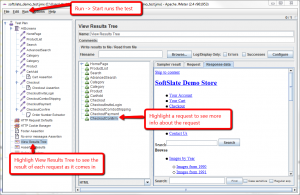

Non-technical folks struggle even more to grasp software’s complexity. In addition to suffering from the same instinct to believe “all will go well”, they don’t have the benefit of experience that comes with grappling with software first-hand. An entertaining thread on Slashdot lists a number of techniques techies have devised to explain software’s complexity to non-techies. My favorite is still the car analogy. Say a typical car has 10,000 parts that must fit together and work in harmony for the machine to run. SoftSlate Commerce, the Java shopping cart application I developed over the course of the last four years, has well over 50,000 lines of code, all of which must fit together and work in harmony for the application to run. Further, the vast majority of those lines of code are unique, whereas in a car, many basic parts such as nuts and bolts are duplicates. (In contrast, duplicate lines of software code are usually abstracted into a subroutine, eliminating the duplication.) I will grant there are plenty of issues involved in getting a car made in the physical world. But as a measure of complexity alone, I think the analogy is useful.

In addition, I suspect that for non-technical people, using popular desktop applications colors their impressions of software in general. If you’re asking for an enhancement to a custom application you use in your business, and your main experience with software is Microsoft Word, Firefox, and so on, you’ll think of analogies to what you’re asking for in those applications. In your experience, the feature doesn’t seem too complicated, because you use something like it in these other applications and it works flawlessly (most of the time). But you may not realize that tens of thousands of hours were spent writing the applications you’re familiar with, hundreds of millions of dollars went into building them, and, yes, even the features that are simplest to use are extremely complicated underneath.

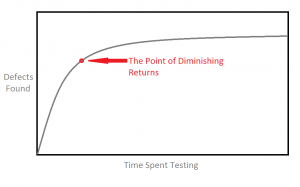

Another insight Brooks touches on is that as a software program gets larger, its complexity grows much faster than its size. The first 1,000 lines of code may take two days to write. Lines 99,000 to 100,000 will surely take longer, since it’s likely they will affect a number of the program’s different aspects and introduce side effects that have to be anticipated and tested against. Certainly I’ve felt this effect and all its pain first-hand. In one customization of SoftSlate Commerce, quite a number of custom features related to the core order processing have been added over the years. With each new requirement, we must now take into account how it might affect every single other feature, to ensure we don’t break something.
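To put a rough number on that last point: if every feature can potentially interact with every other feature, the number of pairs you have to think about is n(n-1)/2. The Java sketch below just does that arithmetic. It assumes only pairwise interactions, which is a simplification of mine rather than anything Brooks states, and the class and method names are made up for illustration.

```java
// Hypothetical illustration: how many feature pairs might interact
// if every feature can affect every other one.
class InteractionCount {

    // Number of distinct pairs among n features: n * (n - 1) / 2.
    static long pairs(long n) {
        return n * (n - 1) / 2;
    }

    public static void main(String[] args) {
        for (long n : new long[] {10, 100, 1000}) {
            System.out.println(n + " features -> " + pairs(n) + " possible pairs");
        }
    }
}
```

Ten features give 45 pairs to worry about, a hundred give 4,950. That is quadratic rather than strictly exponential growth, but it is already enough to explain why the hundredth feature hurts more than the tenth.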

So software is complicated. So what do we do? There are a number of good things you can do to reduce the impact of software’s complexity, but nothing you can do to eliminate it. First and foremost is to employ modularization. If you could divide a software program into independent modules, you could eliminate the compounding effect described above, where new features take longer and longer to implement. In the Java world, the term “decoupling” describes much the same idea. If you could sit around writing completely independent pieces of code and then use a framework to put them together, your job would certainly be much simpler.
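In miniature, the ideal looks something like the Java sketch below. Everything in it is hypothetical (the `ShippingModule` interface, the two modules, and the `Checkout` class are invented for illustration and are not taken from SoftSlate Commerce): the core depends only on an interface, and each module can be written without knowing the others exist.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical module contract: the core application only knows this interface.
interface ShippingModule {
    String name();
    double quote(double weightKg);
}

// Two independent modules; neither one references the other.
class FlatRateShipping implements ShippingModule {
    public String name() { return "flat"; }
    public double quote(double weightKg) { return 5.0; }
}

class PerKiloShipping implements ShippingModule {
    public String name() { return "per-kilo"; }
    public double quote(double weightKg) { return 2.0 * weightKg; }
}

// The core picks the cheapest quote without depending on any concrete module.
class Checkout {
    // Returns the module with the lowest quote, or null if no modules are installed.
    static ShippingModule cheapest(List<ShippingModule> modules, double weightKg) {
        ShippingModule best = null;
        for (ShippingModule m : modules) {
            if (best == null || m.quote(weightKg) < best.quote(weightKg)) {
                best = m;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<ShippingModule> installed =
                Arrays.asList(new FlatRateShipping(), new PerKiloShipping());
        System.out.println(cheapest(installed, 1.0).name());
        System.out.println(cheapest(installed, 10.0).name());
    }
}
```

Adding a third shipping module here touches nothing else, which is exactly the property you want. It holds only as long as every module is content to stay behind that one narrow interface.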

Problem is, you can never, ever achieve pure modular separation without sacrificing control. It’s just not possible; no framework is up to the task. In my early career, I worked a lot with the Miva Merchant shopping cart application, and I learned how to write Miva Merchant “modules”. Their framework was reasonably good, with a decent API for writing modules of different types, such as payment processors, shipping processors, and so on. But it wasn’t enough. Too often you wanted to hook into this screen over here to display something, and then this piece over there to process some new user input, and so on. A sort of uber-module came along called Open UI, which, when installed over Miva Merchant, gave you all sorts of these hook points for making more granular modules. Sure enough, all sorts of “Open UI” modules came along. The opposite problem soon surfaced, however: modules attempting to modify the same areas of the core application would interfere with each other. One module would say, “I want to add a new input box here”, and another module would say, “No, I want to display a fancy table here instead”. It made the process simpler to an extent, but then a new category of particularly hellish and complicated disasters came along.
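The collision is easy to reproduce in miniature. The Java sketch below is a hypothetical hook registry: the class, the hook name `basket.footer`, and the module names are all invented, and none of this is Miva Merchant’s or Open UI’s actual API. It only shows what happens when two modules want the same hook point.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical hook registry: each hook point can be owned by one module.
class HookRegistry {
    private final Map<String, String> ownerByHook = new LinkedHashMap<>();

    // Returns true if the module claimed the hook,
    // false if another module already owns it.
    boolean claim(String hook, String module) {
        String existing = ownerByHook.putIfAbsent(hook, module);
        return existing == null;
    }

    // Returns the owning module's name, or null if the hook is unclaimed.
    String ownerOf(String hook) {
        return ownerByHook.get(hook);
    }

    public static void main(String[] args) {
        HookRegistry registry = new HookRegistry();
        // First module wants an input box at this spot: the claim succeeds.
        System.out.println(registry.claim("basket.footer", "InputBoxModule"));
        // Second module wants a fancy table at the same spot: the claim is rejected.
        System.out.println(registry.claim("basket.footer", "FancyTableModule"));
        System.out.println(registry.ownerOf("basket.footer"));
    }
}
```

A registry like this can detect the conflict, but it cannot resolve it. Someone still has to decide whether the input box or the fancy table wins, and that decision requires knowing about both modules, which is precisely the coupling the hook points were supposed to remove.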

One modern answer to modularization, Aspect-Oriented Programming, I fear falls into the same category: it helps, but it doesn’t solve the problem by any means. In Java, using Spring 2.0 or AspectJ, you can use an AOP framework and write “aspects” that hook into the beginning and end of the methods your application runs. It’s a great tool for modularization, but don’t believe for a second you won’t run into cases where one aspect interferes with another.
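Here is a small sketch of that kind of interference. To keep it self-contained it does not use Spring or AspectJ at all. Instead it fakes “after” advice with the JDK’s own `java.lang.reflect.Proxy`, and the `Pricing` interface, the `advise` helper, and the two pricing rules are hypothetical. Each aspect is reasonable on its own; the result depends on which one wraps the other.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.function.LongUnaryOperator;

// Hypothetical service interface; prices are in cents to avoid rounding issues.
interface Pricing {
    long priceInCents();
}

class AspectDemo {

    // Wraps target in a dynamic proxy that applies 'after' to the value
    // returned by priceInCents(), a stand-in for simple "after" advice.
    static Pricing advise(Pricing target, LongUnaryOperator after) {
        InvocationHandler handler = (proxy, method, args) -> {
            Object result = method.invoke(target, args);
            if (method.getReturnType() == long.class) {
                return after.applyAsLong((Long) result);
            }
            return result; // leave other methods (toString, etc.) untouched
        };
        return (Pricing) Proxy.newProxyInstance(
                Pricing.class.getClassLoader(),
                new Class<?>[] {Pricing.class},
                handler);
    }

    public static void main(String[] args) {
        Pricing base = () -> 10_000L;                  // $100.00
        LongUnaryOperator halfOff = c -> c / 2;        // "discount" aspect
        LongUnaryOperator handlingFee = c -> c + 500;  // "fee" aspect

        // Discount applied first, fee added on top of the discounted price.
        Pricing feeOutside = advise(advise(base, halfOff), handlingFee);
        // Fee added first, so the discount halves the fee as well.
        Pricing discountOutside = advise(advise(base, handlingFee), halfOff);

        System.out.println(feeOutside.priceInCents());
        System.out.println(discountOutside.priceInCents());
    }
}
```

The two orderings produce 5500 and 5250 cents from the same two aspects. Neither aspect’s author did anything wrong, yet whoever assembles the application has to know about both and choose an order, so the aspects were never truly independent.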

At this point you might say, screw it, I don’t want complicated software, so let’s just write software that has fewer features. Ah, yes, now you have hit on a true solution to the problem, but I’m not sure how satisfying it is: avoid complicated applications by refusing to develop features for them. Sure enough, someone’s already put this concept into practice, the web development firm 37signals, who claim their products “do less than the competition—intentionally.”

I have to admit it’s an intriguing approach. It’s true that too much software is “too complicated”, even if all of its features were deemed necessary at one point in time. Remember that the tendency is for the users asking for the features, and the programmers actually developing them, both to underestimate the complexity of software. When that’s the case, inevitably features that have no business being attempted are forced into an application. If folks would stop and really appreciate the complexity of what they’re contemplating, often common sense would convince them to just move on and try something else. Or to explore ways to work *with* the software, instead of asking the software to work *for* them.

Of course, from my perspective, refusing to develop features is a form of professional cop-out, and it certainly doesn’t help if your particular feature really is essential to your business, regardless of how much more complicated it makes the software. Joel Spolsky’s essay on simplicity is a good rebuttal of the 37signals credo. Would that we could all live in a world where reasonable users had reasonable needs, and asked programmers for reasonable features that made their applications only reasonably complicated. Alas, I don’t live in that world, not yet anyway. Maybe once I buy that boat, sell all my other belongings, and live the simple life on the open seas I will.